Retrieval-augmented generation (RAG)

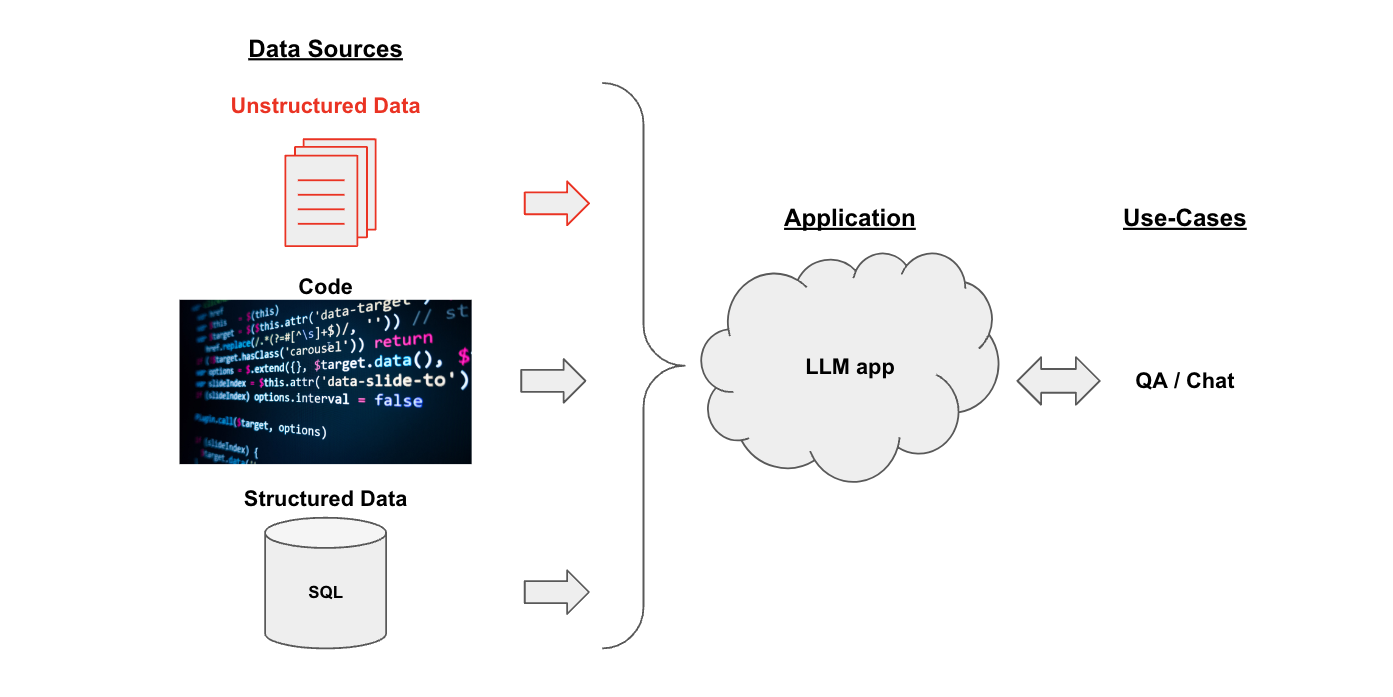

Use case

Suppose you have some text documents (PDFs, blog posts, Notion pages, etc.) and want to ask questions about their contents.

LLMs, given their proficiency in understanding text, are a great tool for this.

In this walkthrough we'll go over how to build a question-answering application over documents using LLMs.

Two closely related use cases, which we cover elsewhere, are:

- QA over structured data (e.g., SQL)

- QA over code (e.g., Python)

Overview

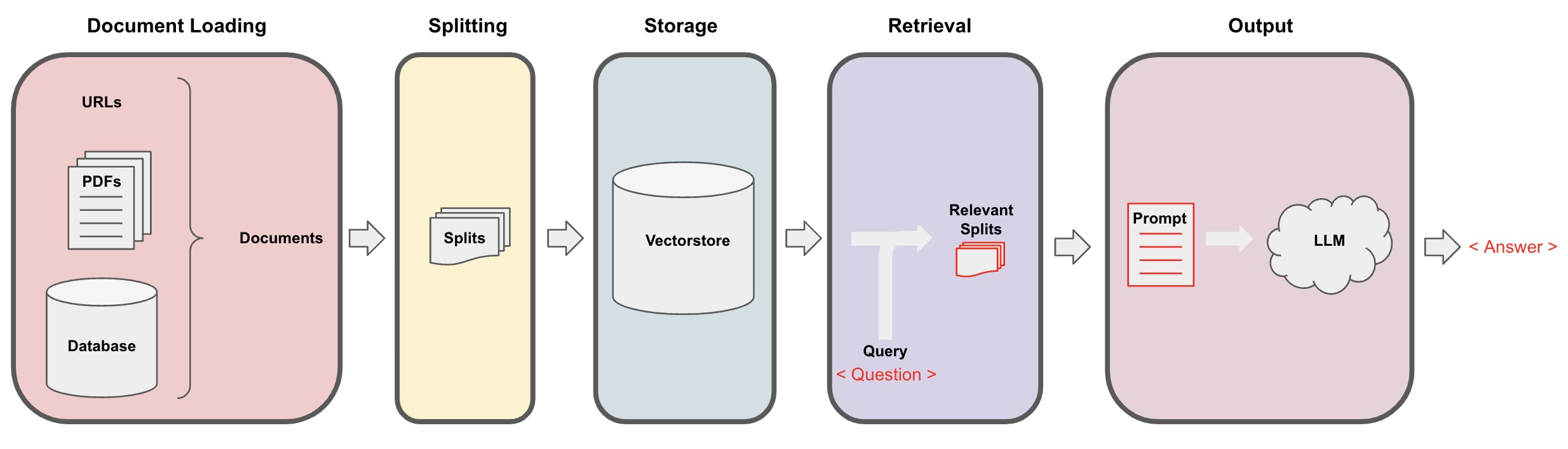

The pipeline for converting raw unstructured data into a QA chain looks like this:

- Loading: First we need to load our data. Use the LangChain integration hub to browse the full set of loaders.

- Splitting: Text splitters break Documents into splits of a specified size.

- Storage: Storage (often a vectorstore) will house and often embed the splits.

- Retrieval: The app retrieves splits from storage (often those with embeddings similar to the input question).

- Generation: An LLM produces an answer using a prompt that includes the question and the retrieved data.

Quickstart

Suppose we want a QA app over this blog post.

We can create this in a few lines of code.

First set environment variables and install packages:

pip install langchain openai chromadb langchainhub

# Set env var OPENAI_API_KEY or load from a .env file

# import dotenv

# dotenv.load_dotenv()

# Load documents

from langchain.document_loaders import WebBaseLoader

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")

# Split documents

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)

splits = text_splitter.split_documents(loader.load())

# Embed and store splits

from langchain.vectorstores import Chroma

from langchain.embeddings import OpenAIEmbeddings

vectorstore = Chroma.from_documents(documents=splits, embedding=OpenAIEmbeddings())

retriever = vectorstore.as_retriever()

# Prompt

# https://smith.langchain.com/hub/rlm/rag-prompt

from langchain import hub

rag_prompt = hub.pull("rlm/rag-prompt")

# LLM

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

# RAG chain

from langchain.schema.runnable import RunnablePassthrough

rag_chain = {"context": retriever, "question": RunnablePassthrough()} | rag_prompt | llm

rag_chain.invoke("What is Task Decomposition?")

AIMessage(content='Task decomposition is the process of breaking down a task into smaller subgoals or steps. It can be done using simple prompting, task-specific instructions, or human inputs.')

Here is the LangSmith trace for this chain.

Below we will explain each step in more detail.

Step 1. Load

Specify a DocumentLoader to load in your unstructured data as Documents.

A Document is an object that holds text (page_content) and a metadata dict.
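As a rough mental model, a loaded document pairs a text field with a metadata dictionary. The sketch below uses a hypothetical `SimpleDocument` dataclass to show that shape; it is not LangChain's own class definition, and the example text is made up.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleDocument:
    """Hypothetical stand-in for a loaded document: text plus metadata."""

    page_content: str
    metadata: dict = field(default_factory=dict)


# Illustrative values only; a real loader fills these in from the source.
doc = SimpleDocument(
    page_content="Task decomposition breaks a task into smaller steps.",
    metadata={"source": "https://lilianweng.github.io/posts/2023-06-23-agent/"},
)
print(len(doc.page_content), doc.metadata["source"])
```

Keeping the source in the metadata is what later makes it possible to filter on it or cite it alongside an answer.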

from langchain.document_loaders import WebBaseLoader

loader = WebBaseLoader("https://lilianweng.github.io/posts/2023-06-23-agent/")

data = loader.load()


Step 2. Split

Split the Document into chunks for embedding and vector storage.

from langchain.text_splitter import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)

all_splits = text_splitter.split_documents(data)
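To make the effect of chunk_size and chunk_overlap concrete, here is a minimal sketch of fixed-width character splitting. The `split_text` helper is hypothetical and deliberately naive: RecursiveCharacterTextSplitter additionally tries to cut on natural separators such as paragraph and line breaks, which this sketch does not attempt.

```python
from __future__ import annotations


def split_text(text: str, chunk_size: int = 500, chunk_overlap: int = 0) -> list[str]:
    """Cut text into windows of at most chunk_size characters.

    Consecutive windows share chunk_overlap characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each window advances
    return [text[start:start + chunk_size] for start in range(0, len(text), step)]


chunks = split_text("a" * 1200, chunk_size=500, chunk_overlap=0)
print([len(c) for c in chunks])  # [500, 500, 200]
```

A non-zero overlap repeats the tail of one chunk at the head of the next, which can help when a relevant sentence straddles a boundary, at the cost of storing more text.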

Go deeper

- DocumentSplitters are just one type of the more generic DocumentTransformers.

- See further documentation on transformers here.

- Context-aware splitters keep the location ("context") of each split in the original Document.

Step 3. Store

To look up our document splits later, we first need to store them.

The most common way to do this is to embed the contents of each document split.

We store the embeddings and the splits together in a vectorstore.

from langchain.embeddings import OpenAIEmbeddings

from langchain.vectorstores import Chroma

vectorstore = Chroma.from_documents(documents=all_splits, embedding=OpenAIEmbeddings())

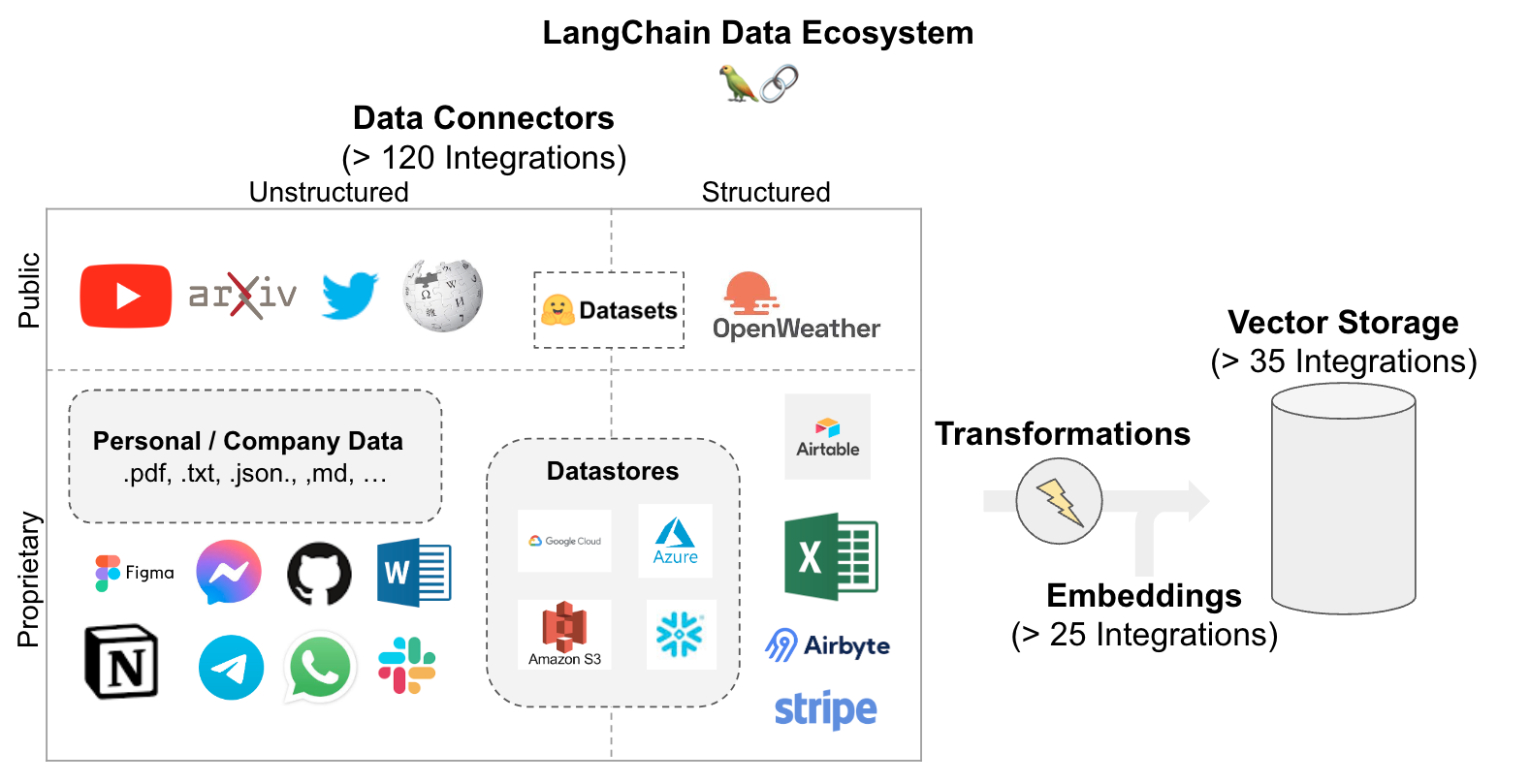
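Conceptually, a vectorstore keeps each split next to its embedding so the two can be looked up together. The sketch below assumes a toy word-count "embedding" (`toy_embed`) and a hypothetical `ToyVectorStore` class; real embedding models return dense float vectors, and Chroma's internals differ.

```python
from __future__ import annotations

from collections import Counter


def toy_embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (an assumption for illustration)."""
    return Counter(text.lower().split())


class ToyVectorStore:
    """Hypothetical in-memory store that keeps each split next to its embedding."""

    def __init__(self) -> None:
        self.entries: list[tuple[Counter, str]] = []

    def add_texts(self, texts: list[str]) -> None:
        # Embed once at write time so that queries only need to embed the question.
        for text in texts:
            self.entries.append((toy_embed(text), text))


store = ToyVectorStore()
store.add_texts(["Task decomposition splits a task", "Memory stores past steps"])
print(len(store.entries))  # 2
```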

Go deeper

Browse the more than 40 vectorstore integrations here.

See further documentation on vectorstores here.

Browse the more than 30 text embedding integrations here.

See further documentation on embedding models here.

Here are Steps 1-3:

Step 4. Retrieve

Retrieve relevant splits for any question using similarity search.

This is simply "top K" retrieval: we select the K splits whose embeddings are most similar to the embedding of the query.

question = "What are the approaches to Task Decomposition?"

docs = vectorstore.similarity_search(question)

len(docs)

4
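The "top K" idea can be sketched without any vectorstore: score every stored vector against the query vector and keep the K best. The sketch below assumes cosine similarity and tiny hand-written 2-D vectors; the `cosine_similarity` and `top_k` helpers are hypothetical, and the metric a real vectorstore uses depends on how it is configured.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=4):
    """Return the indices of the k document vectors most similar to the query."""
    ranked = sorted(
        range(len(doc_vecs)),
        key=lambda i: cosine_similarity(query_vec, doc_vecs[i]),
        reverse=True,
    )
    return ranked[:k]


doc_vecs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
print(top_k([1.0, 0.0], doc_vecs, k=2))  # [0, 1]
```

Scanning every vector like this is linear in the number of splits; production vectorstores typically rely on approximate nearest-neighbor indexes to avoid the full scan.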

Go deeper

Vectorstores are commonly used for retrieval, but they are not the only option. For example, SVMs (see thread here) can also be used.

LangChain has many retrievers including, but not limited to, vectorstores.

All retrievers implement a common method get_relevant_documents() (and its asynchronous variant aget_relevant_documents()).

from langchain.retrievers import SVMRetriever

svm_retriever = SVMRetriever.from_documents(all_splits, OpenAIEmbeddings())

docs_svm = svm_retriever.get_relevant_documents(question)

len(docs_svm)

4

Some common ways to improve on vector similarity search include:

- MultiQueryRetriever generates variants of the input question to improve retrieval.

- Max marginal relevance selects for relevance and diversity among the retrieved documents.

- Documents can be filtered during retrieval using metadata filters.

import logging

from langchain.chat_models import ChatOpenAI

from langchain.retrievers.multi_query import MultiQueryRetriever

logging.basicConfig()

logging.getLogger("langchain.retrievers.multi_query").setLevel(logging.INFO)

retriever_from_llm = MultiQueryRetriever.from_llm(
    retriever=vectorstore.as_retriever(), llm=ChatOpenAI(temperature=0)
)

unique_docs = retriever_from_llm.get_relevant_documents(query=question)

len(unique_docs)

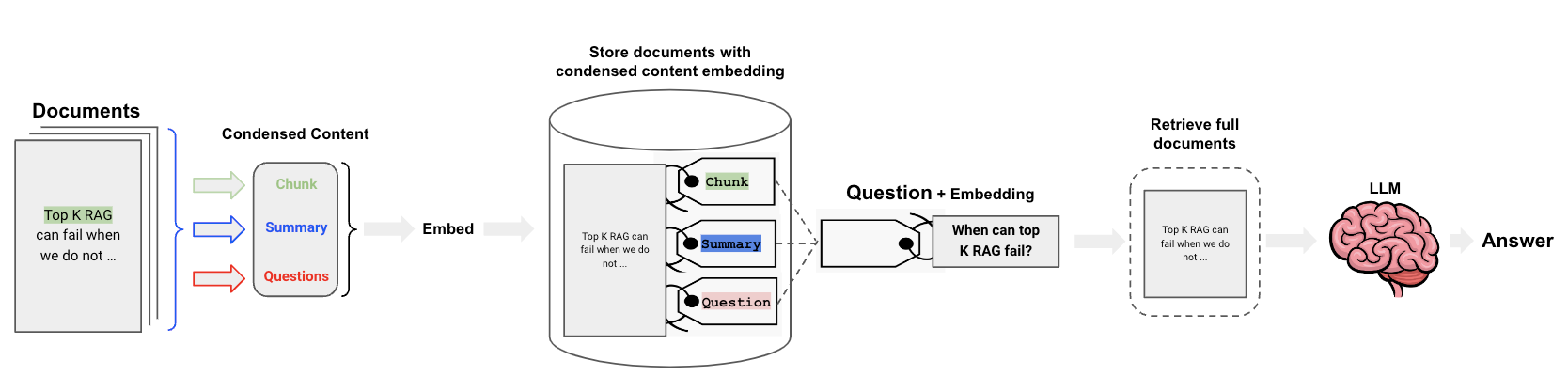
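Max marginal relevance, mentioned above, can be sketched as a greedy loop: at each step pick the candidate that is most relevant to the query while being least similar to what has already been selected. The `mmr` helper, its `lambda_mult` weight, and the toy vectors below are assumptions for illustration, not the library's implementation.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def mmr(query_vec, doc_vecs, k=2, lambda_mult=0.5):
    """Greedily pick k indices, trading relevance against redundancy.

    lambda_mult=1.0 reduces to plain top-k; lower values favor diversity.
    """
    selected = []
    candidates = list(range(len(doc_vecs)))

    def score(i):
        relevance = cosine(query_vec, doc_vecs[i])
        # Redundancy: similarity to the closest already-selected document.
        redundancy = max((cosine(doc_vecs[i], doc_vecs[j]) for j in selected), default=0.0)
        return lambda_mult * relevance - (1 - lambda_mult) * redundancy

    while candidates and len(selected) < k:
        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected


# Documents 0 and 1 are near-duplicates; document 2 points in a different direction.
docs = [[1.0, 0.0], [1.0, 0.05], [0.6, 0.8]]
print(mmr([1.0, 0.2], docs, k=2, lambda_mult=0.5))  # [1, 2]
print(mmr([1.0, 0.2], docs, k=2, lambda_mult=1.0))  # [1, 0]
```

With equal weighting, the second pick skips the near-duplicate in favor of the less similar document; with relevance only, the near-duplicate is returned.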

In addition, a useful concept for improving retrieval is decoupling the documents from the embedded search key.

For example, we can embed a document summary, or a question that is likely to lead to the document being retrieved.

See details here on the multi-vector retriever for this purpose.
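The decoupling idea can be sketched as two lookups: search over short keys (summaries or likely questions), then follow the matched key back to the full parent document. Everything in the sketch is hypothetical, including the documents, the keys, and the word-overlap score that stands in for embedding similarity.

```python
# Hypothetical parent documents, keyed by id.
docstore = {
    "doc-1": "Full original text about task decomposition.",
    "doc-2": "Full original text about agent memory.",
}

# Search keys (summaries or likely questions), each pointing back to a parent id.
search_keys = [
    ("how do agents break tasks into steps", "doc-1"),
    ("summary: task decomposition techniques", "doc-1"),
    ("how do agents remember past actions", "doc-2"),
]


def word_overlap(a, b):
    """Toy similarity: number of shared lowercase words."""
    return len(set(a.lower().split()) & set(b.lower().split()))


def retrieve_parent(query):
    """Match the query against the search keys, then return the full parent document."""
    _, parent_id = max(search_keys, key=lambda pair: word_overlap(query, pair[0]))
    return docstore[parent_id]


print(retrieve_parent("how do agents remember things"))
```

Because several keys can point to the same parent, a document can be reachable through its summary and through several differently phrased questions at once.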

Step 5. Generate

Distill the retrieved documents into an answer using an LLM/Chat model (e.g., gpt-3.5-turbo).

We use the Runnable protocol to define the chain.

The Runnable protocol pipes components together in a transparent way.

We used a prompt for RAG that is checked into the LangChain prompt hub (here).

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

from langchain.schema.runnable import RunnablePassthrough

rag_chain = {"context": retriever, "question": RunnablePassthrough()} | rag_prompt | llm

rag_chain.invoke("What is Task Decomposition?")

AIMessage(content='Task decomposition is the process of breaking down a task into smaller subgoals or steps. It can be done using simple prompting, task-specific instructions, or human inputs.')
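To show roughly how the `|` composition behaves, here is a toy re-creation of the idea in plain Python. The `Step` class, the `parallel` helper, and the fake retriever and LLM are all hypothetical; this is a sketch of the piping concept, not LangChain's Runnable implementation.

```python
class Step:
    """Hypothetical minimal 'runnable': wraps a function and supports `|`."""

    def __init__(self, func):
        self.func = func

    def invoke(self, value):
        return self.func(value)

    def __or__(self, other):
        # self | other: run self first, then feed its output into other.
        return Step(lambda value: other.invoke(self.invoke(value)))


def parallel(mapping):
    """Run every step in the mapping on the same input and collect the results in a dict."""
    return Step(lambda value: {key: step.invoke(value) for key, step in mapping.items()})


# Stand-ins for the retriever, passthrough, prompt, and LLM.
retrieve = Step(lambda question: ["Task decomposition splits work into subgoals."])
passthrough = Step(lambda question: question)
build_prompt = Step(
    lambda d: "Context: " + " ".join(d["context"]) + "\nQuestion: " + d["question"]
)
fake_llm = Step(lambda prompt: "FAKE ANSWER for: " + prompt.splitlines()[-1])

chain = parallel({"context": retrieve, "question": passthrough}) | build_prompt | fake_llm
print(chain.invoke("What is Task Decomposition?"))
```

The dict at the head of the chain plays the role of `parallel` here: the same question is sent to the retriever and passed through unchanged, and the prompt receives both under the keys it expects.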

Go deeper

Choosing LLMs

- Browse the more than 90 LLM and chat model integrations here.

- See further documentation on LLMs and chat models here.

- See a guide on local LLMs here.

Customizing the prompt

As shown above, we can load prompts (e.g., this RAG prompt) from the prompt hub.

The prompt can also be easily customized, as shown below.

from langchain.prompts import PromptTemplate

template = """Use the following pieces of context to answer the question at the end.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

Use three sentences maximum and keep the answer as concise as possible.

Always say "thanks for asking!" at the end of the answer.

{context}

Question: {question}

Helpful Answer:"""

rag_prompt_custom = PromptTemplate.from_template(template)

rag_chain = (
    {"context": retriever, "question": RunnablePassthrough()} | rag_prompt_custom | llm
)

rag_chain.invoke("What is Task Decomposition?")

AIMessage(content='Task decomposition is the process of breaking down a complicated task into smaller, more manageable subtasks or steps. It can be done using prompts, task-specific instructions, or human inputs. Thanks for asking!')
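To see what kind of text the model receives, the sketch below fills a shortened version of the template with plain `str.format`. The context strings are made up for illustration, and joining them with blank lines is an assumption about formatting rather than a description of what the chain above does with the retrieved Documents.

```python
template = """Use the following pieces of context to answer the question at the end.

{context}

Question: {question}

Helpful Answer:"""

# Made-up stand-ins for the text of the retrieved splits.
retrieved_texts = [
    "Task decomposition can be done with simple prompting.",
    "It can also use task-specific instructions or human inputs.",
]

prompt_text = template.format(
    context="\n\n".join(retrieved_texts),
    question="What is Task Decomposition?",
)
print(prompt_text)
```

Printing the filled prompt like this is a cheap way to check that instructions, context, and question end up in the intended order before spending tokens on a model call.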

We can use LangSmith to see the trace.